What is OSCAR?

OSCAR is an open-source platform that supports the Functions as a Service (FaaS) computing model for file-processing applications. It can be automatically deployed on multiple Clouds to create highly parallel, event-driven, serverless file-processing applications. These applications execute on customized runtime environments, provided by Docker containers that run on an elastic Kubernetes cluster.

Why use OSCAR for inference of AI models?

Once trained, Artificial Intelligence (AI) models are used to perform inference on a set of files. This requires event-driven capabilities and automated resource provisioning to cope with dynamic changes in the workload. By using auto-scaled Kubernetes clusters, OSCAR can run model inference on each file uploaded to the object storage (e.g., MinIO).

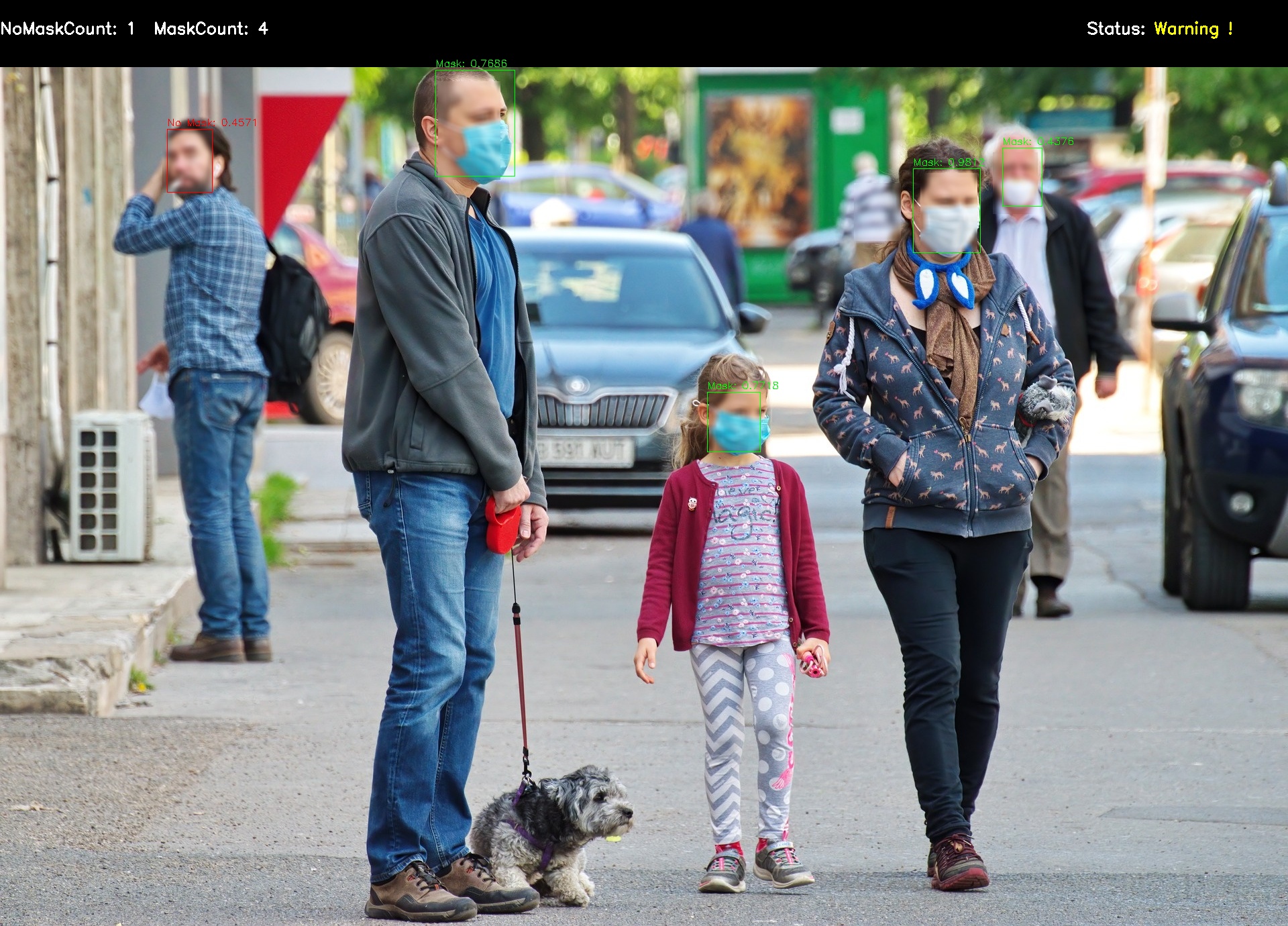

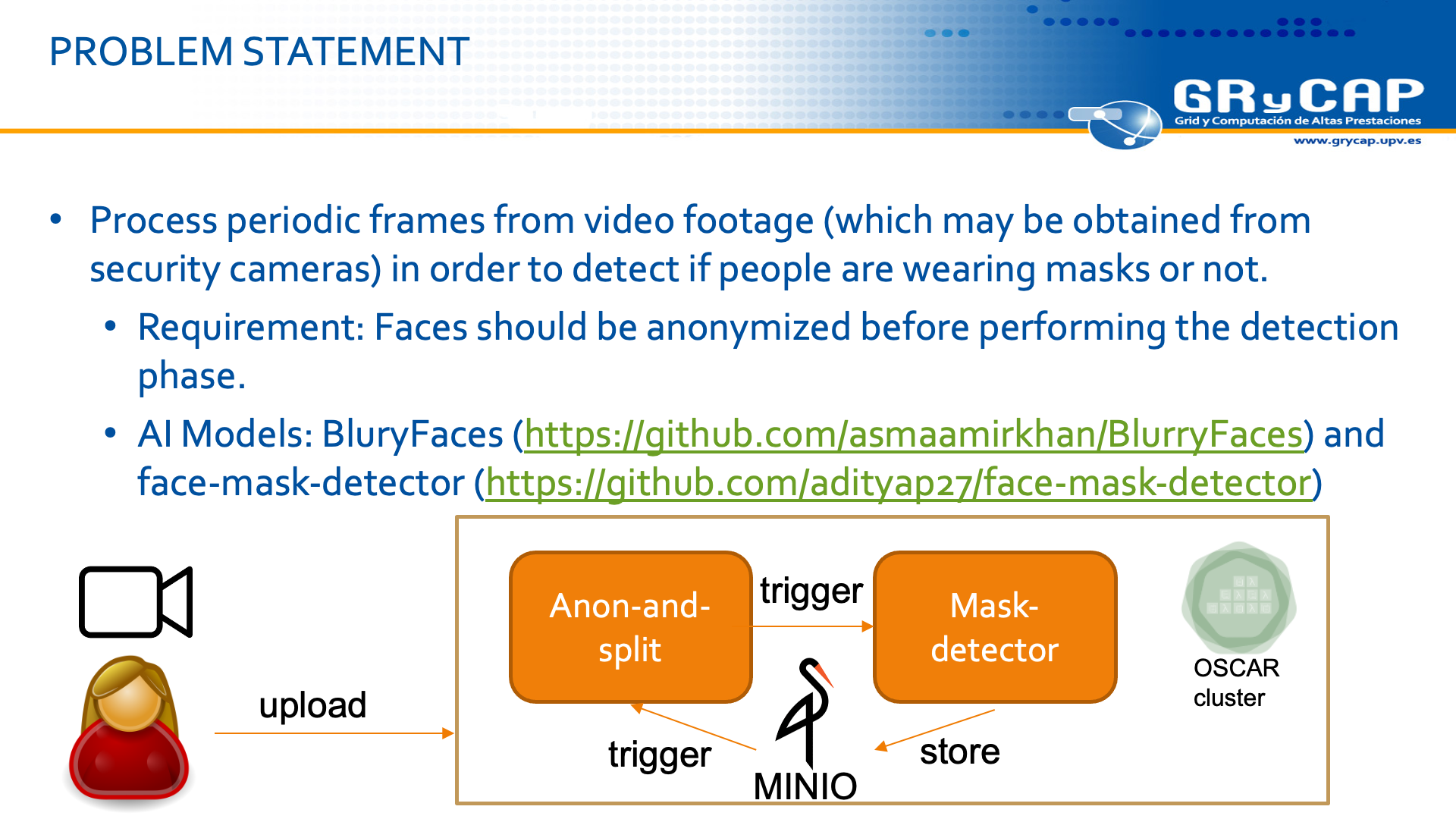
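To make the one-file, one-invocation model more concrete, here is a minimal, self-contained Python simulation. It is not OSCAR's API. A toy `Bucket` class stands in for a MinIO bucket and emits one event per upload. A hypothetical `Dispatcher` invokes a placeholder `fake_inference` function once per uploaded file. A fixed-size thread pool loosely stands in for the elastic Kubernetes cluster that OSCAR actually relies on. All names here are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Bucket:
    """Toy stand-in for an object-storage bucket (hypothetical, not MinIO's API)."""
    name: str
    objects: Dict[str, bytes] = field(default_factory=dict)
    listeners: List[Callable[[str, str], None]] = field(default_factory=list)

    def upload(self, key: str, data: bytes) -> None:
        self.objects[key] = data
        # Emit one "object created" event per uploaded file.
        for listener in self.listeners:
            listener(self.name, key)


def fake_inference(data: bytes) -> bytes:
    """Hypothetical placeholder for running an AI model on one file."""
    return b"result-for:" + data


class Dispatcher:
    """Runs one function invocation per upload event, in parallel.

    The fixed-size pool is only a rough stand-in for an auto-scaled cluster.
    """

    def __init__(self, src: Bucket, dst: Bucket, max_workers: int = 4) -> None:
        self.src, self.dst = src, dst
        self.pool = ThreadPoolExecutor(max_workers=max_workers)
        self.futures = []
        src.listeners.append(self.on_event)

    def on_event(self, bucket: str, key: str) -> None:
        # Each event schedules an independent invocation for that file.
        self.futures.append(self.pool.submit(self.invoke, key))

    def invoke(self, key: str) -> None:
        result = fake_inference(self.src.objects[key])
        self.dst.upload(key + ".out", result)

    def wait(self) -> None:
        # Block until every scheduled invocation has finished.
        for future in self.futures:
            future.result()
        self.pool.shutdown()


inp, out = Bucket("input"), Bucket("output")
dispatcher = Dispatcher(inp, out)
for i in range(3):
    inp.upload(f"img{i}.jpg", f"pixels{i}".encode())
dispatcher.wait()
print(sorted(out.objects))  # ['img0.jpg.out', 'img1.jpg.out', 'img2.jpg.out']
```

In a real deployment the upload event comes from the object storage and scaling is handled by Kubernetes. The sketch only mirrors the pattern of one uploaded file triggering one function execution.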

The mask detection use case

These are the AI models employed:

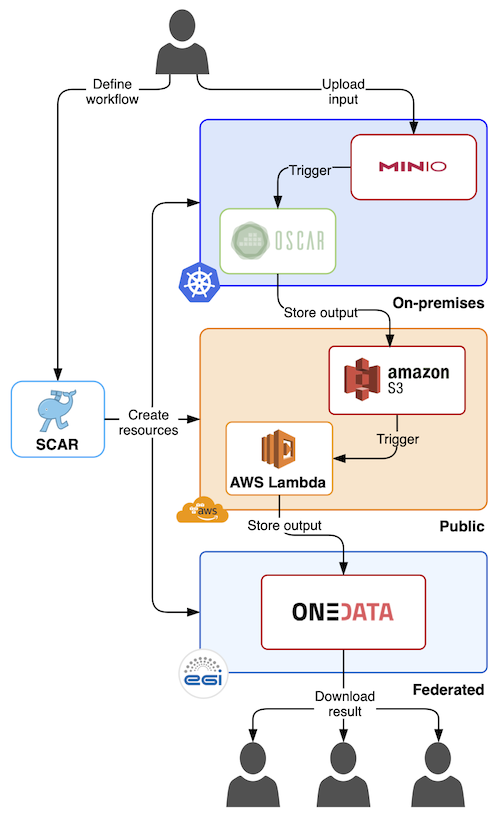

In this use case, both functions run within the same OSCAR cluster, but they could run on different infrastructures (edge, on-premises and public Cloud). This is shown in the following paper, which also uses the SCAR tool to accelerate executions via AWS Lambda:

Risco, S., Moltó, G., Naranjo, D.M., Blanquer, I., 2021. Serverless Workflows for Containerised Applications in the Cloud Continuum. J. Grid Comput. 19, 30. https://doi.org/10.1007/s10723-021-09570-2

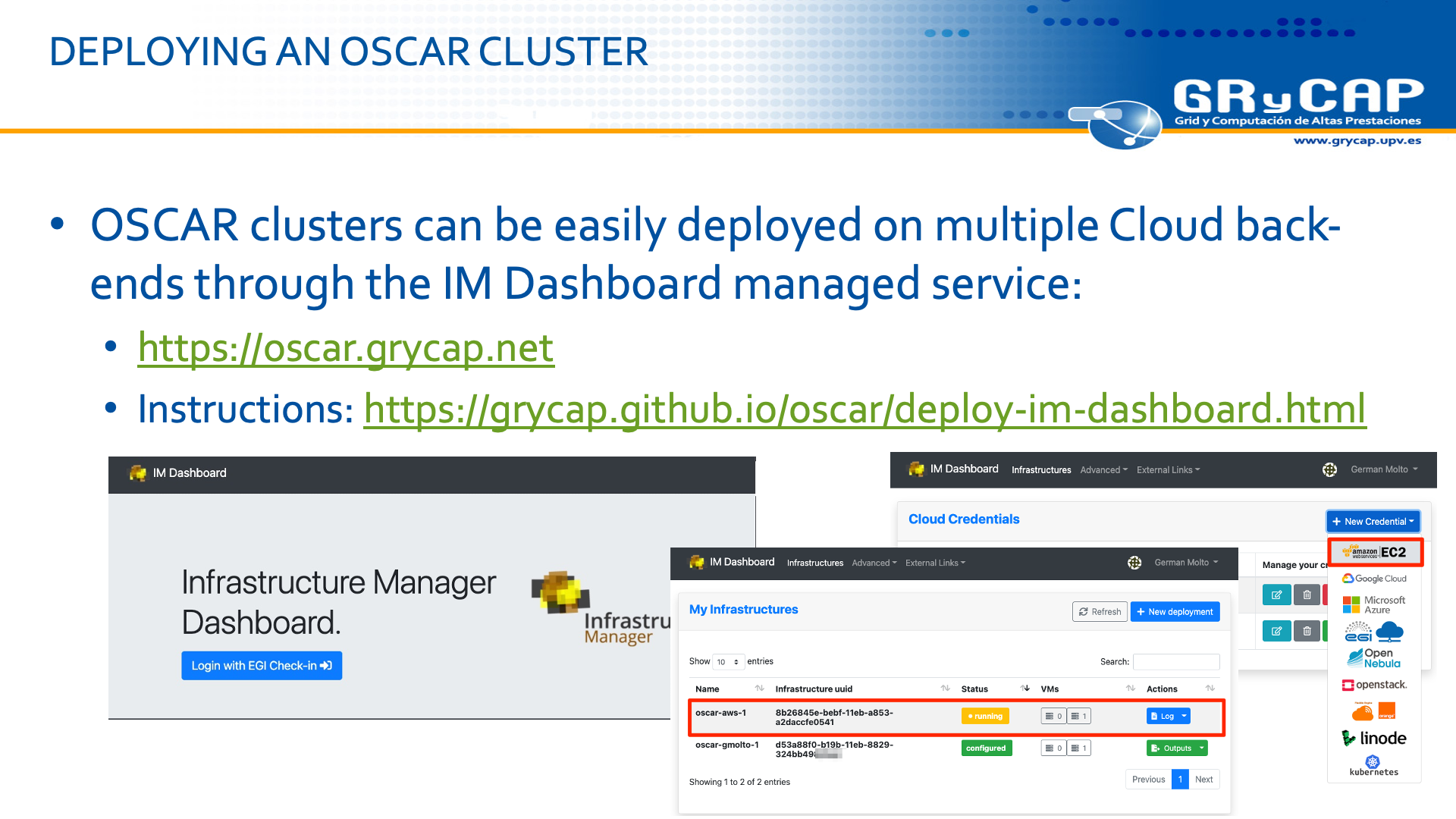
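The chaining of the two functions can be sketched as follows. For illustration only, this assumes that each function reads from an input path and writes its result to an output path that serves as the next function's input. The `Stage` class, the `on_upload` helper, the stage names, the site labels and the lambda "models" are hypothetical placeholders. They do not reproduce the actual models of this use case or the interfaces of OSCAR or SCAR.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str                       # hypothetical function name
    site: str                       # label only: "edge", "on-premises", "cloud"
    func: Callable[[bytes], bytes]  # placeholder for the AI model it wraps


def on_upload(stages: List[Stage], key: str, data: bytes,
              trace: List[Tuple[str, str]]) -> Tuple[str, bytes]:
    """Propagate one uploaded file through a chain of functions.

    The output path of stage i is the input path of stage i + 1, so writing
    a result is what triggers the next function in the workflow.
    """
    filename = key.rsplit("/", 1)[-1]
    for stage in stages:
        data = stage.func(data)
        key = f"{stage.name}-out/{filename}"
        trace.append((stage.site, key))  # record where each step ran
    return key, data


# Two hypothetical stages standing in for the use case's two functions.
pipeline = [
    Stage("stage-one", "edge", lambda b: b.upper()),
    Stage("stage-two", "cloud", lambda b: b + b"!"),
]

trace: List[Tuple[str, str]] = []
key, result = on_upload(pipeline, "input/frame.jpg", b"frame", trace)
print(key, result)  # stage-two-out/frame.jpg b'FRAME!'
```

Because each stage only depends on the path it reads from, the stages can be placed on different infrastructures without changing the workflow logic.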

Deploying your OSCAR cluster

You can deploy your own OSCAR cluster on your favourite cloud using the IM Dashboard (Guide).

YouTube video

You can follow along with the demo, from infrastructure deployment to event-driven AI inference for mask detection within an OSCAR cluster, in the following video: