Blog

Guide to create a service in OSCAR

This is a step-by-step guide that shows developers how to create their first service in OSCAR.

Read more

Composing AI Inference pipelines with Node-RED & FlowFuse

In this post, we are going to learn about composing AI inference workflows by invoking OSCAR services with Node-RED & FlowFuse.

Read more

Composing AI Inference workflows based on OSCAR services with Elyra in EGI Notebooks

In this post, we will learn about composing AI inference workflows by invoking OSCAR services with Elyra.

Read more

Integration of OSCAR in AI-SPRINT Use Cases

The AI-SPRINT European Project uses the OSCAR serverless platform to support the scalable execution of the inference phase of AI models along the computing continuum in all of its use cases: personalized healthcare, maintenance & inspection and, finally, farming 4.

Read more

Data-driven Processing with dCache, Apache Nifi and OSCAR

This post describes the integration between OSCAR and dCache. Data stored in dCache triggers the invocation of an OSCAR service to perform the processing of that data file within the scalable OSCAR cluster.

Read more

Design of workflows across OSCAR services with Node-RED (Part 2)

In this post, we continue the work with Node-RED and its interaction with OSCAR services.

Read more

Design of workflows across OSCAR services with Node-RED (Part 1)

In this post, we explain how Node-RED integrates with OSCAR. We provide the tools needed to build workflows between OSCAR and Node-RED simply and intuitively through flow-based programming techniques.

Read more

Use COMPSs with OSCAR

This is a tutorial on integrating COMPSs with OSCAR. In this post, we explain what COMPSs is and how it integrates with OSCAR to support parallel processing within an on-premises serverless platform.

Read more

Invoking an OSCAR Service from an EGI Jupyter Notebook

In this post, we showcase the OSCAR Python API, which was implemented to interact with OSCAR clusters and their services, through EGI Notebooks, a Jupyter-based tool for data analysis.

Read more

Why use OSCAR as a serverless AI/ML model inference platform?

What is OSCAR? OSCAR is an open-source serverless platform for event-driven data-processing containerized applications that execute on elastic Kubernetes clusters that are dynamically provisioned on multiple Clouds.

Read more

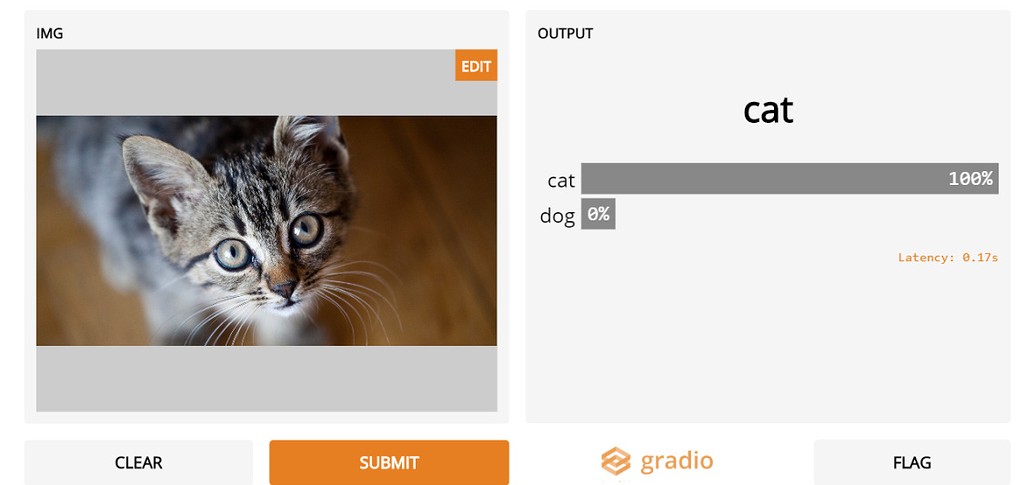

User interfaces with Gradio for AI model inference in OSCAR services

What is Gradio? Gradio is a Python library for building web user interfaces for Machine Learning (ML) applications.

Read more

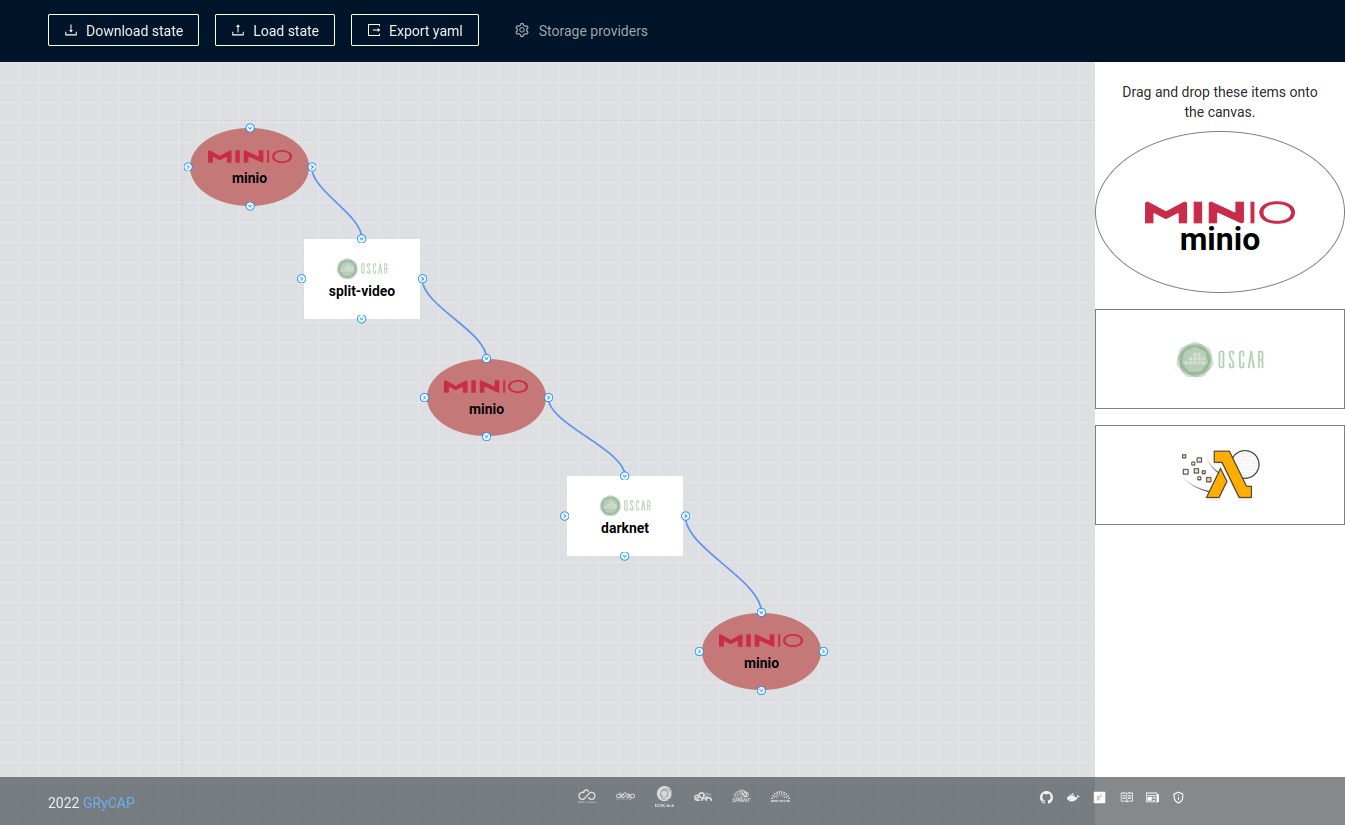

FDL Composer to create a workflow with OSCAR

FDL-Composer is a tool to visually design workflows for OSCAR and SCAR. We are going to simulate the example video-process

Read more

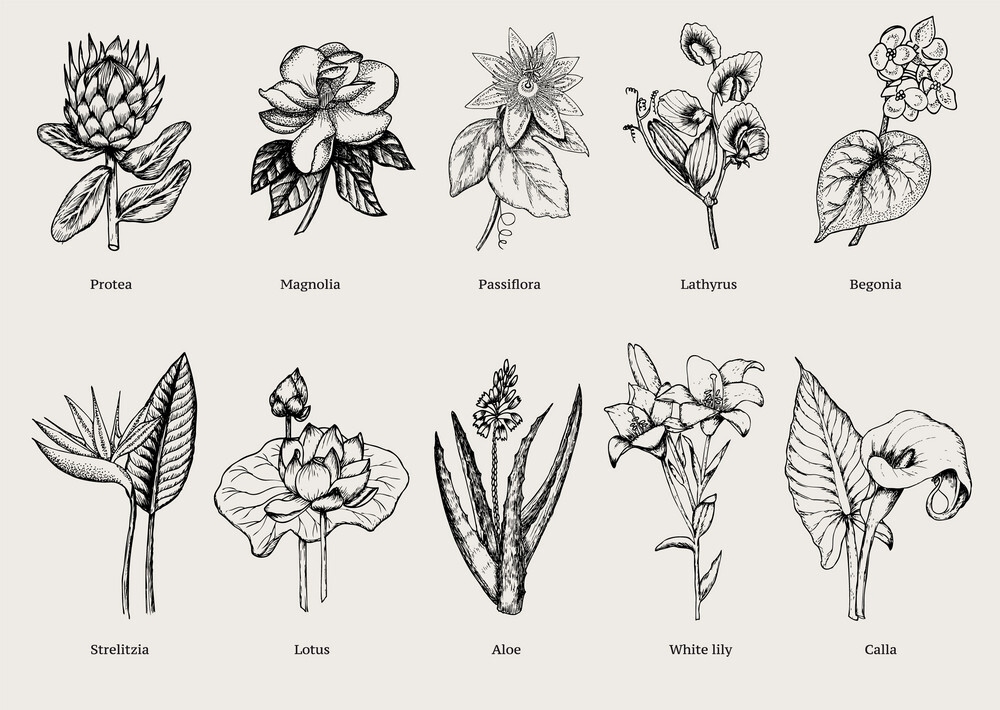

Using OSCAR as a FaaS platform for synchronous inference of a machine learning model

This guide shows how to use the OSCAR platform for Machine Learning inference with a pre-trained convolutional neural network classification model by DEEP-Hybrid-DataCloud: the Plants species classifier, which classifies plant pictures by species.

Read more

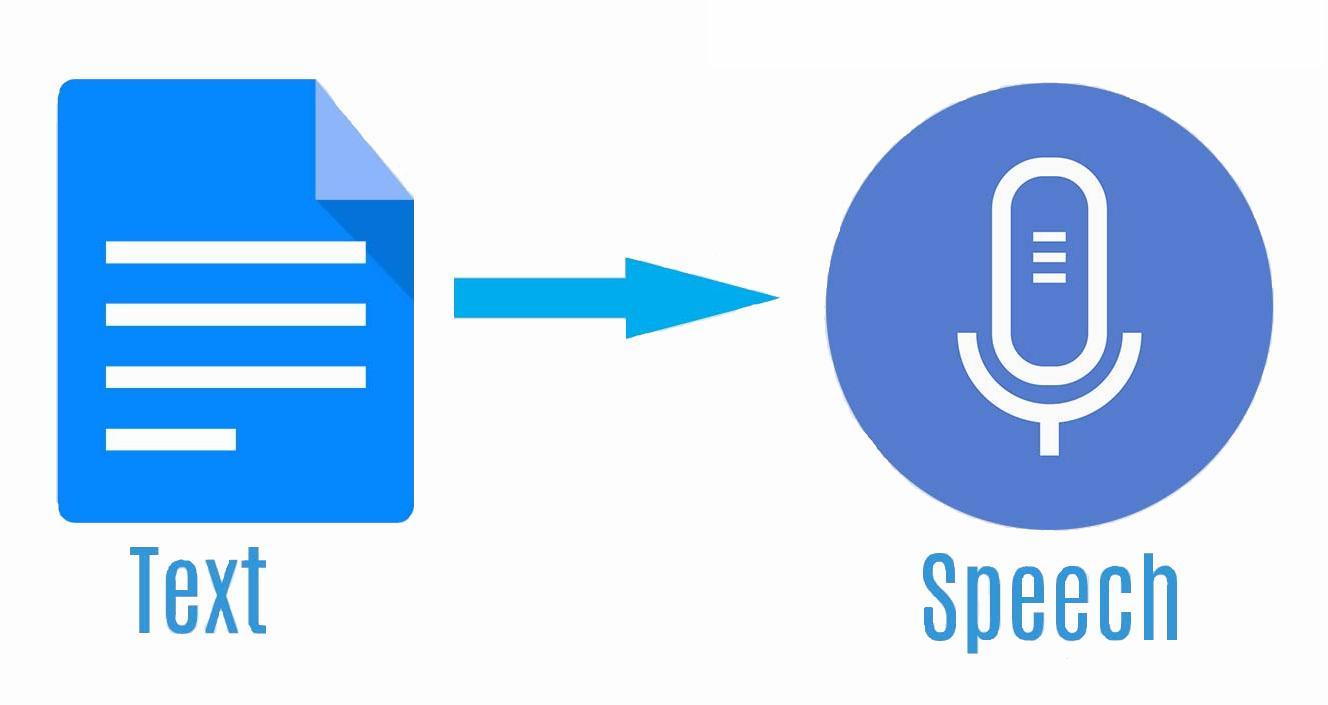

Convert Text to Speech with OSCAR

This use case implements text-to-speech transformation using the OSCAR serverless platform, where an input of plain text returns an audio file.

Read more

A Serverless Cloud-to-Edge Computing Continuum Approach for Edge AI inference

OSCAR is an open-source framework for data-processing serverless computing. Users upload files to an object store, which invokes a function responsible for processing each file.

Read more

Using OSCAR as a FaaS platform for scalable asynchronous inference of a machine learning model

OSCAR is a framework to efficiently support on-premises FaaS (Functions as a Service) for general-purpose file-processing computing applications.

Read more

Deployment of an OSCAR cluster in the EGI Federated Cloud

Here we present a step-by-step guide to help you deploy an OSCAR cluster in the EGI Federated Cloud, specifically in the EOSC-Synergy VO.

Read more

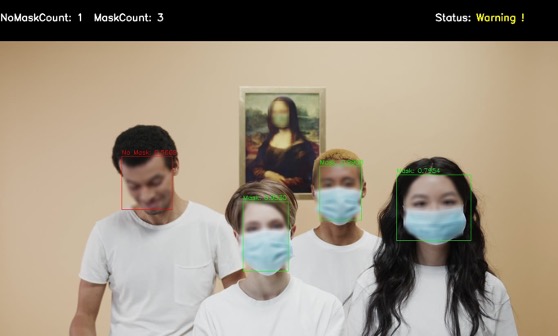

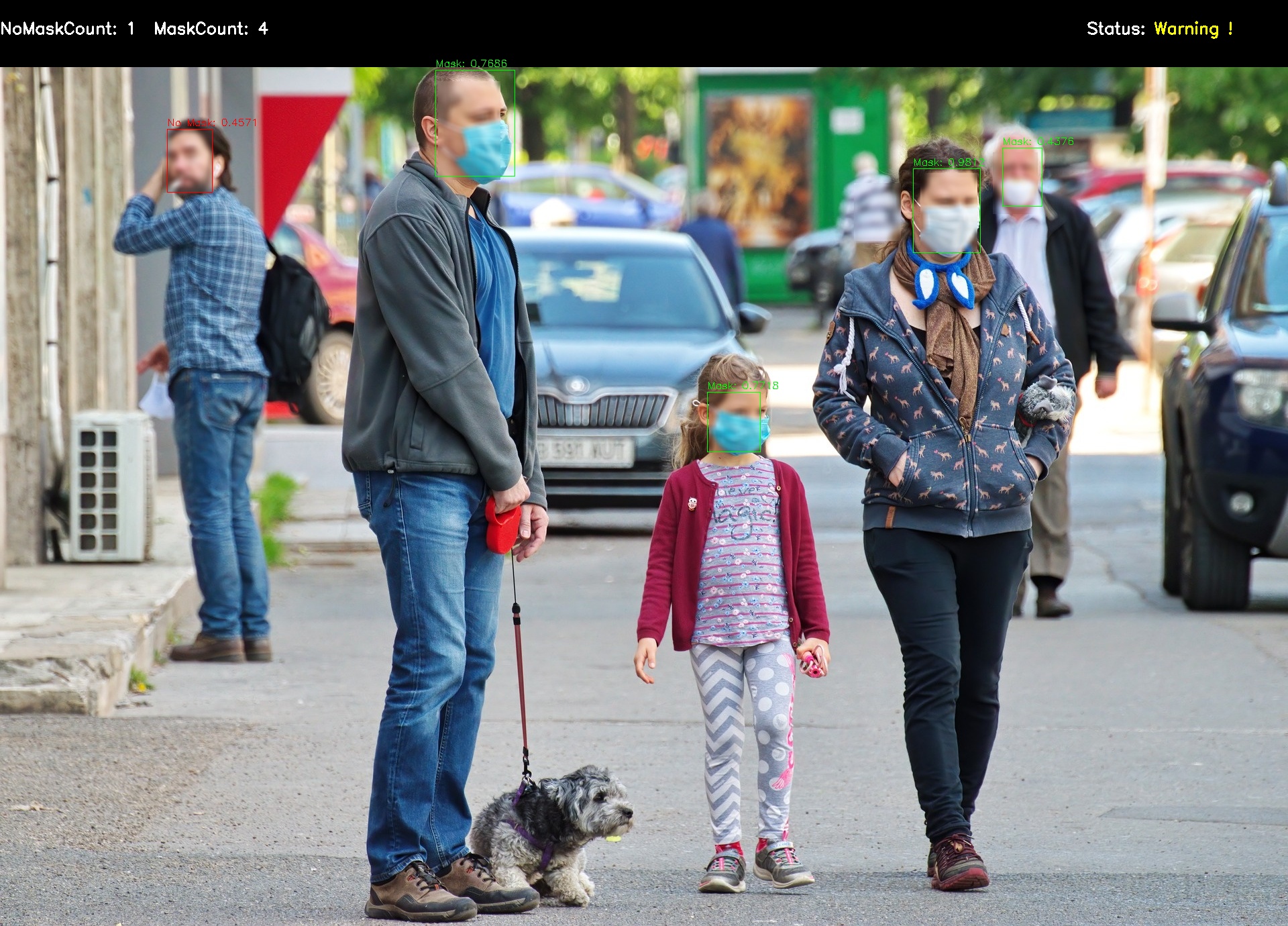

Event-driven inference of AI models for mask detection with the OSCAR serverless platform

What is OSCAR? OSCAR is an open-source platform to support the Functions as a Service (FaaS) computing model for file-processing applications.

Read more